📝 We Are Living in a Materialized World

The challenge of adding up lots of numbers in a dynamic and performant way

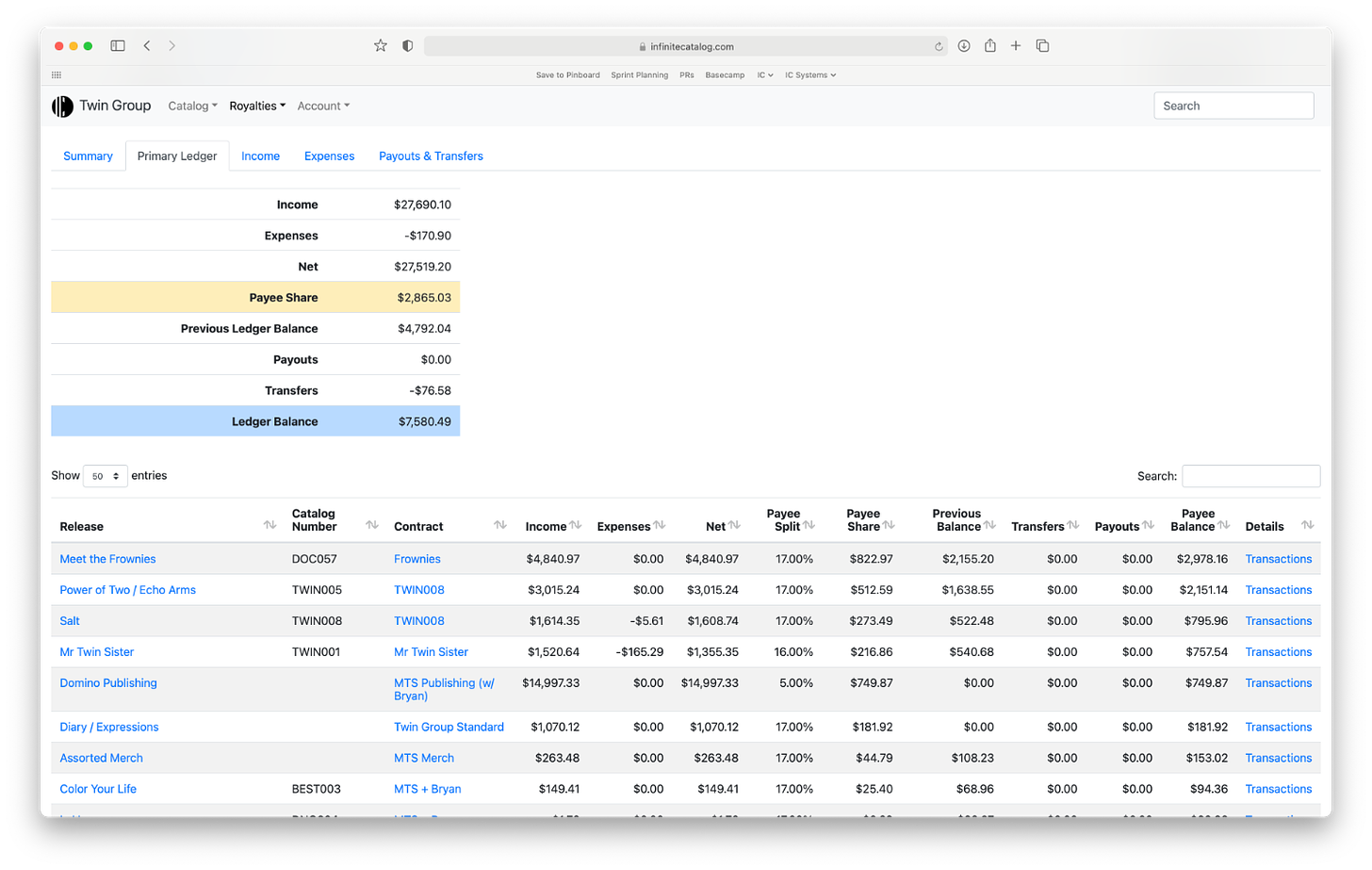

Here at IC, the main technical challenge we face is adding up lots of numbers in a dynamic and performant way. The advent of streaming means that modern royalty accounting involves millions of individual transactions, and even the most optimized database queries start suffering from performance issues. We’ve spent the last couple of months building architecture to handle this issue, and I’m excited to share what we’ve come up with. It relies on two main pieces: materialized views in our PostgreSQL database, and leveraging a change data capture event stream in Kafka to trigger refreshing those materialized views.

The heart of our software is what we call a payee summary, a report which calculates how much a payee in our system is owed along with a breakdown of how we got to that number. This report is generated using a fairly complex and hand-optimized SQL query. Traditionally, royalty accounting is done a round at a time, with the final balance carried over and used as the starting point for the next round. This can make changes and corrections to previously closed rounds a painful affair, and if there’s one thing we’ve learned in our time doing royalties it’s that there are always changes. To avoid this, our payee summaries are calculated using every transaction from the beginning of an account’s history, no matter which round we’re reporting on. This makes corrections a breeze, but the price we pay is that as an account’s transaction history grows, our performance suffers.

Our initial attempts at mitigating these performance issues were fairly vanilla; caching the result of the query, and invalidating it whenever anything changed in an account. This worked out ok for a while, but had a couple of key downsides — slow first time responses, and small changes to an account requiring recalculations of every single payee summary. So we started rethinking our approach with a lot of help from Martin Kleppman’s invaluable book Designing Data-Intensive Applications. We came to the conclusion that we could break up our single large query into individual pieces that could be cached and refreshed separately. The most expensive piece, aggregated transaction sums, don’t change that often, mostly when users import new data. The other more dynamic pieces, like payee splits or which contract to use for a set of transactions, are fairly cheap in terms of performance cost.

The first decision to make was how to store and cache the individual pieces. After some experiments with caching JSON and data frames in Redis, we settled on PostgreSQL materialized views. If you’re not familiar, materialized views persist the results of a query in a table-like form. The advantage we saw in using them over something like JSON or data frames is that we get all the power of declarative SQL with its joins and filters when stitching the individual pieces together, and PostgreSQL is a piece of our stack we know and understand well. Next up was figuring out the best way to keep these materialized views up to date.

There are only two hard things in Computer Science: cache invalidation and naming things.

Phil Karlton

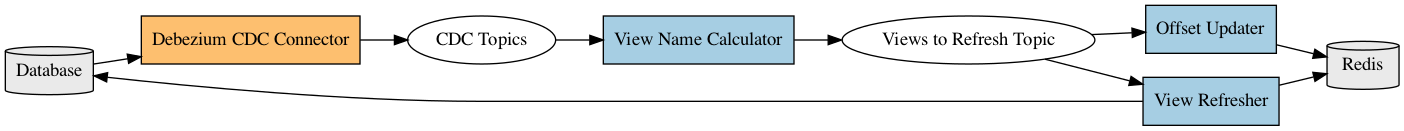

We’d already been experimenting with using Apache Kafka and Debezium to stream changes in our database to other parts of our system, and were really impressed with the throughput and reliability. We decided to use change data capture as our cache invalidation mechanism. What we ended up with looks something like this:

Let’s walk through the above diagram in more detail.

- Debezium picks up changes from our database and writes them to a stream for each table, or what Kafka calls topics.

- A View Name Calculator consumer subscribes to all of the relevant CDC topics, reads messages as they come in and determines which views will need to be refreshed. It writes messages with the views that need to be refreshed to a new topic in Kafka.

- Every message in Kafka has an offset, an incrementing integer. Our Offset Updater service has a very simple function; it reads “Views to Refresh” messages and stores the latest message offset for each view in Redis as

{view_name}_latest_offset. This number essentially acts as a marker of what our system understands as being “up to date” for an individual view. - The second consumer, View Refresher is doing the real heavy lifting. Every time it receives a message, it refreshes the materialized view referenced in the message, and once its done, caches the message offset in Redis as

{view_name}_refreshed_offset.

Now when we receive a request to our application for a given payee summary, we check all the materialized views required for that summary. If the value of {view_name}_refreshed_offset is less than {view_name}_latest_offset for any of the views in Redis, we know that the data is stale, and return a message to the user that changes are being synced. Otherwise, we know its safe to use the views in making our calculations, and run our final query that combines the various materialized views into the payee summary.

So now, we’re doing all the heavy lifting as the writes happen, not when a payee summary is requested, and we only do the work we know we have to do, instead of starting from zero every time. We’ve been testing the new setup in production for a couple of months and have beeen live for a few weeks, and response times for our payee summaries have improved by several orders of magnitude!